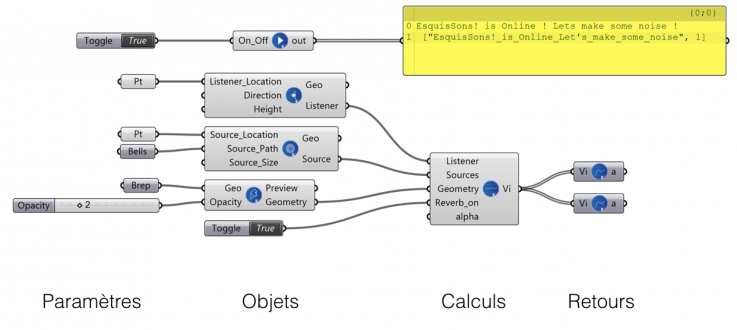

Esquis’Sons! is based on a Grasshopper plugin that defines the spatial context. It lets you assign acoustic characteristics to this context (the degree of porosity of volumes and faces) and place a listening point anywhere in the modeled space. You then adjust the qualities and the origin of the sound environments in the scene. Up to 10 localized sources can be added. The geometry of the space becomes audible to designers and is ready to be tested, experimented with, and created again … through sound!

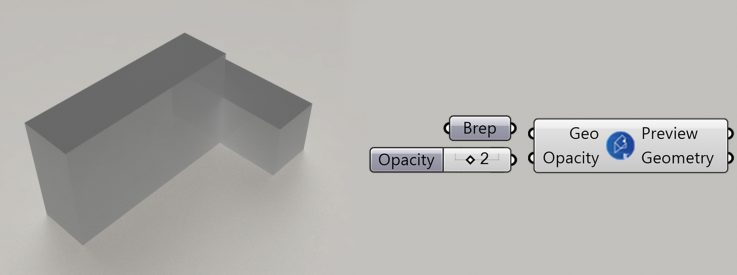

1. Define a spatial context

First, we build an architectural and urban morphological « context » in Rhinoceros3D via Grasshopper, using numerical parameters that all remain adjustable at any time. These morphologies are then linked to « objects » of the Esquis’Sons! plugin, which assign them a porosity (« opacity »). Any geometry, simple or complex, can be used to feed the spatial and sound scene.

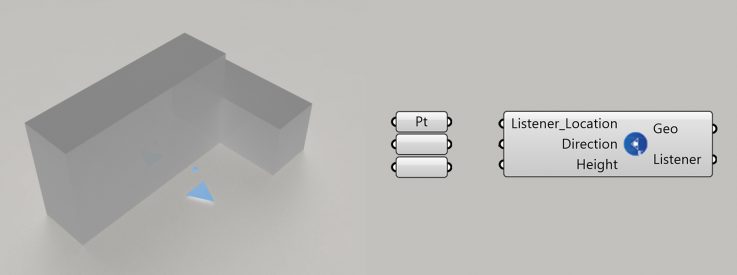

2. Set the listening point

We associate a point in the model with the position of the listening point, from which the auralization is calculated in real time. We then define its size and its orientation. Moving the point in the model moves the listening point in the Esquis’Sons! application and changes the sound scene heard.
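To make concrete why moving or turning the listening point changes what is heard, here is a minimal sketch that computes the distance and horizontal angle of a source as seen from the listener. It is an illustration only, not the plugin's code: the function name `relative_position`, the `heading_deg` parameter and the angle convention are assumptions introduced for this example.

```python
import math


def relative_position(listener, heading_deg, source):
    """Return (distance, azimuth_deg) of a source relative to a listener.

    Hypothetical helper, not part of Esquis'Sons!.
    listener, source: (x, y, z) points in model coordinates.
    heading_deg: direction the listener faces in the XY plane,
                 measured counterclockwise from the +X axis (assumed convention).
    azimuth_deg: angle of the source relative to that heading,
                 in [-180, 180), positive to the listener's left.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    dz = source[2] - listener[2]
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    bearing = math.degrees(math.atan2(dy, dx))
    azimuth = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return distance, azimuth


# The same source, heard from two listening positions facing +X.
source = (0.0, 3.0, 0.0)
before = relative_position((0.0, 0.0, 0.0), 0.0, source)
after = relative_position((0.0, 6.0, 0.0), 0.0, source)
```

In this example the source is equally far from both positions but switches from the listener's left to the right, which is the kind of change the real-time auralization makes audible.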

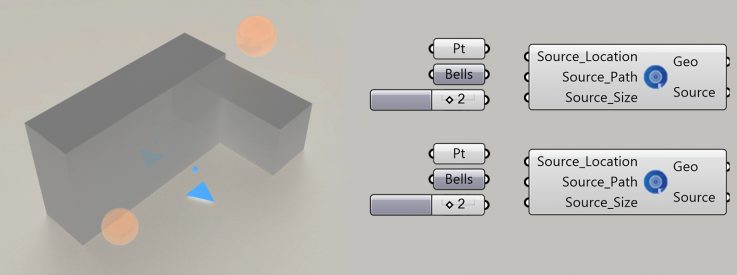

3. Build the sound scene

Next, we build a sound scene by defining sources. Each source is associated with a point (« source_location ») in the Rhinoceros / Grasshopper 3D model, with a sound file on the hard disk (« source_path »), and with a size (« source_size ») in space (which is not synonymous with intensity).
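The three source inputs can be pictured as one small record per source. The sketch below is only a way to think about the data, not the plugin's implementation: the field names reuse « source_location », « source_path » and « source_size » from the text, while the `Source` and `SoundScene` classes and the example file path are hypothetical. The limit of 10 comes from the introduction above.

```python
from dataclasses import dataclass
from typing import List, Tuple

MAX_SOURCES = 10  # the page states that up to 10 localized sources can be added


@dataclass
class Source:
    source_location: Tuple[float, float, float]  # point in the 3D model
    source_path: str                             # sound file on the hard disk
    source_size: float                           # extent in space, not loudness


class SoundScene:
    """Hypothetical container for sources; not part of Esquis'Sons!."""

    def __init__(self) -> None:
        self.sources: List[Source] = []

    def add(self, source: Source) -> None:
        # Refuse an eleventh source, mirroring the stated limit.
        if len(self.sources) >= MAX_SOURCES:
            raise ValueError("a scene holds at most %d sources" % MAX_SOURCES)
        self.sources.append(source)


scene = SoundScene()
# "sounds/fountain.wav" is a placeholder path for illustration.
scene.add(Source((0.0, 5.0, 1.5), "sounds/fountain.wav", 2.0))
```

Keeping the size as its own field, separate from any gain setting, reflects the point made above that size in space is not the same thing as intensity.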

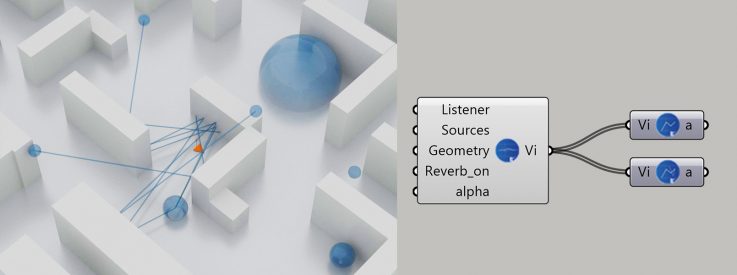

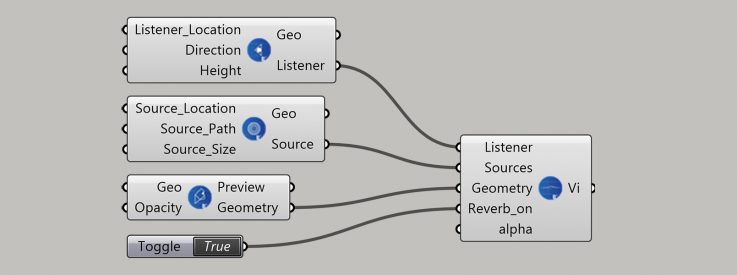

4. The main engine performs the auralization

Connecting these different objects to the main sketch engine produces an audible result. Reverberation that takes the modeled space into account can then be switched on or off (beta version, still being tested).

5. – Listen – Compare – Listen – Change – Listen – Create

Any modification to the space built in the spatial model is thus audible live in the Esquis’Sons! application.

6. To go further: Visualize first and second reflections

It is also possible to visualize the first and second reflections arriving at the listening point thanks to the “vi” output. This gives a visual explanation of the acoustic differences heard while listening.
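For readers who want to see what a first reflection is geometrically, here is a minimal sketch using the classic image-source construction: the source is mirrored across a flat surface, and the reflection point is where the line from the mirrored source to the listener crosses that surface. How the plugin actually computes the paths it draws is not described here, so this is an assumption for illustration; the function name and its parameters are hypothetical, and the surface is treated as an infinite plane with no occlusion.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def first_reflection(source, listener, plane_point, plane_normal):
    """Return (reflection_point, path_length) for one planar surface,
    or None when source and listener are not on the same side of it.

    Hypothetical helper based on the image-source method.
    """
    length = math.sqrt(_dot(plane_normal, plane_normal))
    n = (plane_normal[0] / length, plane_normal[1] / length, plane_normal[2] / length)

    # Signed distances of both points to the plane.
    ds = _dot(_sub(source, plane_point), n)
    dl = _dot(_sub(listener, plane_point), n)
    if ds * dl <= 0.0:
        return None

    # Mirror the source across the plane.
    image = (source[0] - 2.0 * ds * n[0],
             source[1] - 2.0 * ds * n[1],
             source[2] - 2.0 * ds * n[2])

    # Intersect the segment image -> listener with the plane.
    d = _sub(listener, image)
    t = ds / (ds + dl)
    point = (image[0] + t * d[0], image[1] + t * d[1], image[2] + t * d[2])

    # The reflected path is as long as the straight line from the image.
    path_length = math.sqrt(_dot(d, d))
    return point, path_length


# Source and listener 1 m above a floor at z = 0, 4 m apart.
result = first_reflection((0.0, 0.0, 1.0), (4.0, 0.0, 1.0),
                          (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

A second reflection can be obtained in the same spirit by mirroring the image source again across a second surface, which is why changing the geometry visibly shifts the drawn paths.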